Introduction

Data centers today are the backbone of digital transformation, powering applications from cloud computing to artificial intelligence (AI) and high-performance computing (HPC).

As workloads grow in complexity and demand, traditional CPU-based systems often struggle to provide the required performance and efficiency.

This is where Field Programmable Gate Arrays (FPGAs) come into play. Offering reconfigurable hardware, low-latency processing, and energy efficiency, FPGAs are becoming a critical component of modern data centers.

In this blog post, we explore how FPGA acceleration is transforming data centers and enabling faster, smarter AI and HPC workloads.

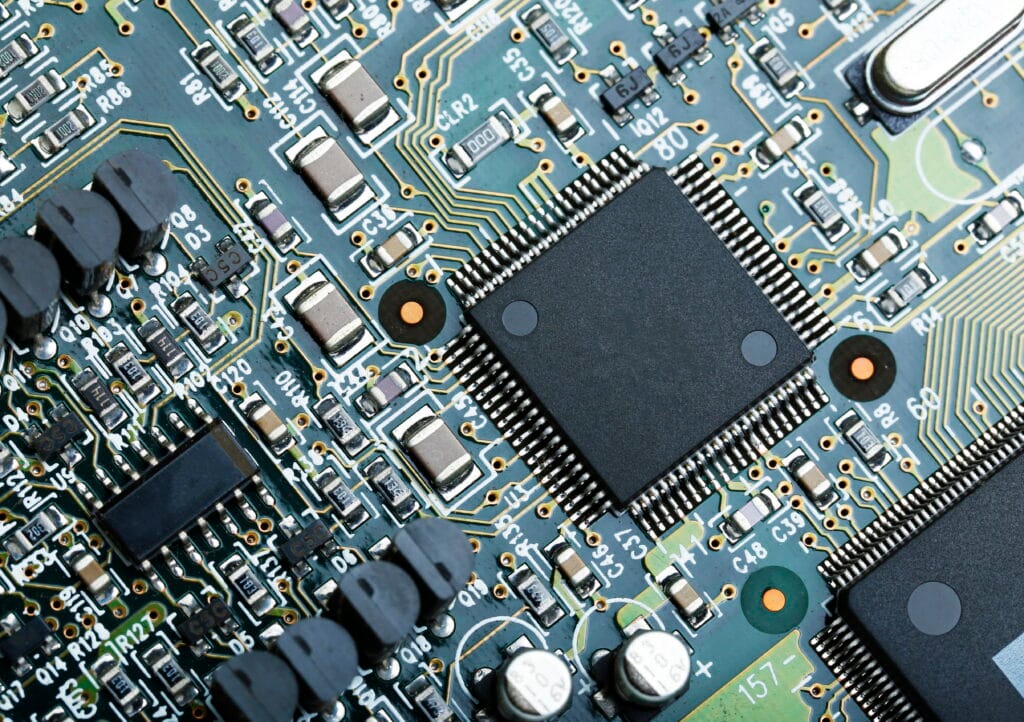

What is FPGA Acceleration?

FPGA acceleration involves offloading specific compute-intensive tasks from a general-purpose CPU to a programmable FPGA.

Unlike CPUs or GPUs, whose logic is fixed at manufacture, FPGAs let developers configure hardware logic tailored to the workload, enabling parallel processing, optimized pipelines, and reduced latency.

Key benefits include:

- Custom Hardware Optimization: The hardware logic can be tailored to specific algorithms or tasks.

- Low Latency Processing: Tasks complete faster thanks to parallelism and hardware-level optimization.

- Energy Efficiency: Reduced power consumption per computation compared to general-purpose processors.
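To make the parallelism benefit concrete, here is a toy cycle-count model. It assumes an idealized design in which every stage takes one clock cycle and a new input can enter the pipeline every `ii` cycles (the initiation interval). The function names are hypothetical, and the model ignores memory stalls and clock-frequency differences between devices.

```python
def sequential_cycles(n_items: int, n_stages: int) -> int:
    """Cycles when each item passes through all stages before the next one starts."""
    return n_items * n_stages


def pipelined_cycles(n_items: int, n_stages: int, ii: int = 1) -> int:
    """Cycles for a hardware pipeline that accepts a new item every `ii` cycles.

    The first result emerges after `n_stages` cycles (pipeline fill); each
    subsequent item completes `ii` cycles after the previous one.
    """
    if n_items <= 0:
        return 0
    return n_stages + (n_items - 1) * ii


if __name__ == "__main__":
    # Hypothetical workload: 1,000 items through a 5-stage datapath.
    print(sequential_cycles(1000, 5))  # 5000
    print(pipelined_cycles(1000, 5))   # 1004
```

With an initiation interval of 1, throughput approaches one result per cycle regardless of pipeline depth, which is the kind of gain custom FPGA pipelines aim for; a strictly sequential implementation pays the full stage count for every item.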

Role of FPGAs in AI Workloads

Artificial intelligence applications, particularly deep learning, involve massive amounts of matrix multiplications and parallel operations. FPGAs accelerate these operations effectively:

- Inference Acceleration: FPGAs can run neural network inference faster than CPUs for specific workloads, reducing response times in applications such as image recognition or recommendation engines.

- Flexible Precision Support: AI workloads often benefit from mixed-precision computations. FPGAs can be customized to support INT8, INT16, or FP16 arithmetic, balancing speed and accuracy.

- Real-Time AI Applications: Edge and cloud AI applications, such as video analytics and natural language processing, require near-instant processing, and FPGA acceleration can meet these low-latency requirements.
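The INT8 idea can be sketched in a few lines. This sketch assumes symmetric per-tensor quantization (one scale factor per vector, codes clipped to [-127, 127]), which is one common scheme among several; the helper names are hypothetical. The integer multiply-accumulate loop stands in for what an INT8 MAC array on an FPGA would compute with a wide accumulator, followed by a single rescale back to floating point.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization; returns (integer codes, scale)."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest code and clip to the symmetric INT8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def int8_dot(a, b):
    """Approximate dot(a, b) using integer arithmetic on INT8 codes."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    # Integer multiply-accumulate; Python ints never overflow, standing in
    # for the wide (e.g. 32-bit) accumulator used in hardware.
    acc = sum(x * y for x, y in zip(qa, qb))
    # One floating-point rescale at the end recovers the real-valued result.
    return acc * sa * sb


if __name__ == "__main__":
    a = [0.5, -1.0, 0.25]
    b = [1.0, 2.0, -0.5]
    exact = sum(x * y for x, y in zip(a, b))
    print(exact, int8_dot(a, b))  # close, but not identical
```

The small gap between the two printed values is the accuracy cost traded for cheaper integer arithmetic, which is the speed/accuracy balance described in the precision bullet above.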

Role of FPGAs in High-Performance Computing

High-performance computing workloads, including scientific simulations, financial modeling, and bioinformatics, demand extreme compute power:

- Parallel Computing: FPGAs handle massively parallel workloads efficiently by implementing custom pipelines for repetitive tasks.

- Optimized Data Transfer: FPGA-based accelerators reduce data movement overhead between memory and CPU, improving throughput.

- Customizable Architecture: HPC applications often require specialized arithmetic operations that FPGAs can implement directly in hardware.
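Why data movement matters can be shown with a back-of-the-envelope offload model. It assumes hypothetical inputs (CPU run time, accelerator compute time, bytes moved, link bandwidth) and a single transfer cost; it is not a measurement of any particular device, and the function name is illustrative. The `overlapped` flag models a streaming design in which transfers and computation proceed concurrently, as a custom pipeline can arrange, instead of strictly one after the other.

```python
def offload_speedup(cpu_s: float, accel_compute_s: float,
                    bytes_moved: float, link_bytes_per_s: float,
                    overlapped: bool = False) -> float:
    """Speedup of offloading a task to an accelerator versus running it on the CPU.

    Without overlap, the accelerator pays transfer time plus compute time.
    With overlap (streaming), the slower of the two dominates.
    """
    transfer_s = bytes_moved / link_bytes_per_s
    if overlapped:
        accel_total_s = max(transfer_s, accel_compute_s)
    else:
        accel_total_s = transfer_s + accel_compute_s
    return cpu_s / accel_total_s
```

For example, with hypothetical figures of 10 s on the CPU, 1 s of accelerator compute, and 4 GB moved over a 4 GB/s link, `offload_speedup(10.0, 1.0, 4e9, 4e9)` gives 5.0, while the overlapped variant gives 10.0: hiding the transfer behind computation doubles the speedup in this example. If ten times as much data had to move, the non-overlapped offload would be slower than the CPU.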

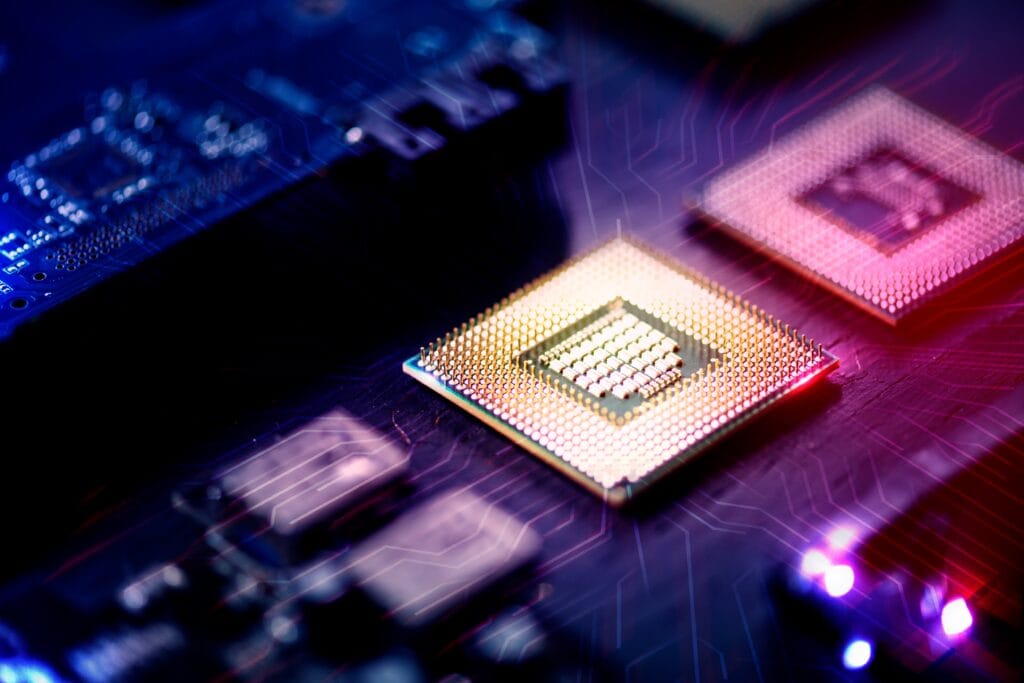

FPGA vs CPU vs GPU in Data Centers

Understanding why FPGAs are chosen for acceleration requires comparing them with CPUs and GPUs:

- CPU: General-purpose, flexible, but limited in parallelism and energy efficiency for large-scale AI/HPC tasks.

- GPU: Excellent for highly parallel workloads such as deep learning, but it can consume more power than an FPGA on specialized workloads and offers less freedom to customize the datapath.

- FPGA: Combines flexibility and parallelism, with low latency and energy efficiency. Ideal for tasks requiring custom pipelines or near-real-time processing.

Use Cases of FPGA Acceleration in Data Centers

FPGA acceleration is being deployed in various data center scenarios:

- Cloud AI Services: Companies like Microsoft and Amazon integrate FPGAs into their cloud services to accelerate workloads such as AI inference.

- Financial Computing: High-frequency trading and risk analysis benefit from FPGAs' low-latency computation.

- Scientific Research: Simulation-heavy tasks like weather modeling, particle physics, and genomics utilize FPGA acceleration for performance gains.

- Data Compression and Encryption: FPGAs can perform real-time compression and encryption with minimal latency.

Challenges in FPGA Adoption

While FPGAs offer significant advantages, adopting them in data centers comes with challenges:

- Complex Development: Designing efficient FPGA accelerators requires hardware knowledge, which may be a barrier for software-focused teams.

- Toolchain and Integration: FPGA programming and integration into existing data center workflows can be complex.

- Scalability: Scaling FPGA deployments across large server farms requires careful planning and resource management.

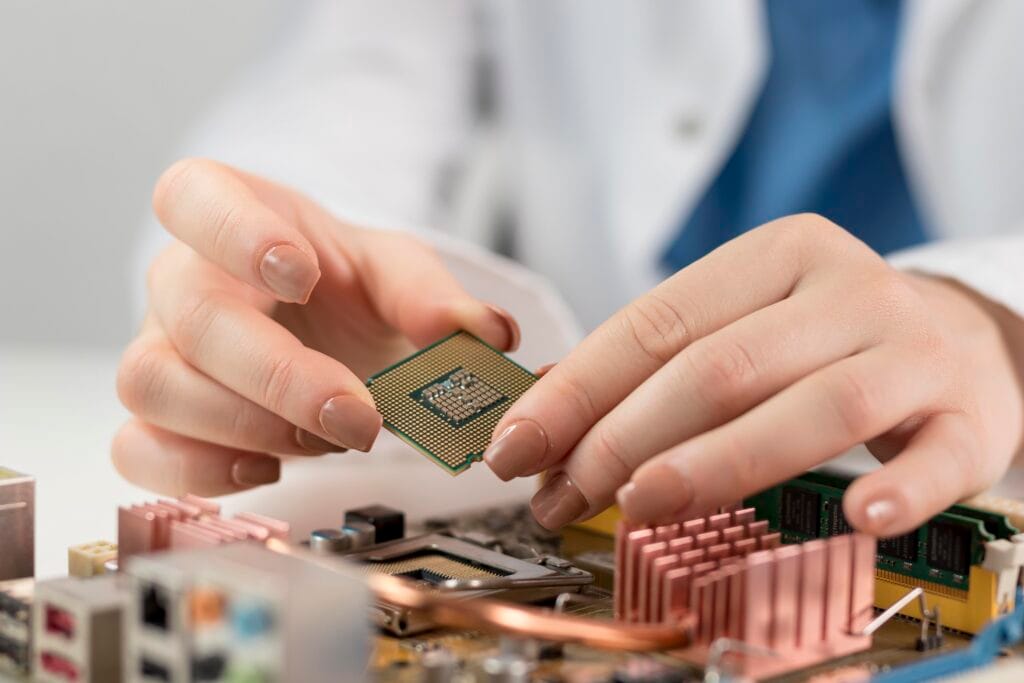

The Future of FPGA Acceleration

Demand for FPGA acceleration in data centers is growing, driven by AI, HPC, and edge computing trends.

Emerging technologies like CXL (Compute Express Link) and heterogeneous computing architectures make FPGA integration easier and more powerful.

With advancements in high-level synthesis (HLS) and AI-assisted FPGA design, development is becoming faster and more accessible, paving the way for broader adoption.

Conclusion

FPGA acceleration is revolutionizing data center computing by providing tailored, energy-efficient, and low-latency solutions for AI and HPC workloads.

As data demands continue to grow, FPGA acceleration allows organizations to optimize performance, reduce costs, and unlock new possibilities in real-time data processing.

By integrating FPGA technology into data center infrastructure, companies can stay ahead in a competitive landscape driven by AI, HPC, and next-generation cloud services.
